First and foremost, read this: HTTP Live Streaming Overview

Update to the latest HTTP Streaming Tools (disk image, login required). I am using the ones posted on 08 Jan 2010; they can be found in the Downloads & ADC Program Assets > iPhone section.

I was a bit taken aback when the documentation stated that one has to deal with a delay of about 30 seconds. If you are looking for real-time streaming, there might be other solutions.

Why 30 seconds? Apple recommends segmenting your video stream into 10-second pieces, and it seems that 3 of them have to be loaded before playback starts. Of course, the segment length can be changed. What would happen if we changed it to, say, 1 second? Live streaming closer to real-time?

Unfortunately not. This seems to force the iPhone to make more connections to the server and to pause the video more often than not in order to retrieve the next segment(s).
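The trade-off can be put into numbers. This is only a back-of-the-envelope sketch: the figure of 3 buffered segments is the observation from above, not a documented constant, and the function names are made up for illustration.

```python
def startup_delay_seconds(segment_seconds: float, buffered_segments: int = 3) -> float:
    """Rough delay behind live: the client waits for N complete segments."""
    return segment_seconds * buffered_segments


def segment_requests_per_minute(segment_seconds: float) -> float:
    """Each segment costs one HTTP request (index file refreshes not counted)."""
    return 60.0 / segment_seconds


# Compare the recommended 10-second segments with 1-second segments.
for seconds in (10, 1):
    print(
        f"{seconds:>2}s segments: "
        f"~{startup_delay_seconds(seconds):.0f}s delay, "
        f"{segment_requests_per_minute(seconds):.0f} segment requests per minute"
    )
```

Shorter segments shrink the delay on paper, but the number of requests per client grows by the same factor, which fits the stalling described above.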

So, after installing the Segmenting Tools, they should be present at:

/usr/bin/mediastreamsegmenter

/usr/bin/mediafilesegmenter

/usr/bin/variantplaylistcreator

/usr/bin/mediastreamvalidator
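If you want to double-check the installation, a small script along these lines should do. The four paths are the ones listed above; the function name is just an illustration.

```python
import os

# Paths as listed above; adjust if your installation differs.
EXPECTED_TOOLS = [
    "/usr/bin/mediastreamsegmenter",
    "/usr/bin/mediafilesegmenter",
    "/usr/bin/variantplaylistcreator",
    "/usr/bin/mediastreamvalidator",
]


def missing_tools(paths=EXPECTED_TOOLS):
    """Return the paths that do not exist or are not executable."""
    return [p for p in paths if not (os.path.isfile(p) and os.access(p, os.X_OK))]


missing = missing_tools()
if missing:
    print("missing: " + ", ".join(missing))
else:
    print("all segmenting tools found")
```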

I won't be too concerned with the other tools and will concentrate on getting a live stream to work. You guessed it, we need mediastreamsegmenter for that.

Let's examine the basic workflow:

Video Source ➔ Segmenter ➔ Distribution

Distribution is done via an HTTP server; that's the nice thing about this. No special server, no special port, just good old HTTP. Not that Apple gives us much choice when it comes to streaming to the iPhone.

Using the Apple-provided segmenter is not a must either; others have been rolling their own. Go there if you are adventurous.

Video Source

The biggest trouble for me was setting up the right video source. mediastreamsegmenter is quite picky in that regard and only works with the right input.

A quick look at the mediastreamsegmenter man page reveals the following:

- It is looking for an MPEG-2 transport stream, either over UDP or over stdin.

- It will only work with MPEG-2 Transport Streams as defined in ISO/IEC 13818-1. The transport stream must contain H.264 (MPEG-4, part 10) video and AAC or MPEG audio. If AAC audio is used, it must have ADTS headers. H.264 video access units must use Access Unit Delimiter NALs, and must be in unique PES packets.
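A quick sanity check for "is this a transport stream at all" can be sketched as follows. MPEG-2 transport stream packets are 188 bytes long and each starts with the sync byte 0x47. The check below only looks at this framing; it does not verify the H.264, ADTS or Access Unit Delimiter requirements. The function names are made up for illustration.

```python
TS_PACKET_SIZE = 188  # fixed MPEG-2 transport stream packet length
TS_SYNC_BYTE = 0x47   # first byte of every transport stream packet


def looks_like_transport_stream(data: bytes, packets_to_check: int = 5) -> bool:
    """Return True if the first few 188-byte packets all start with 0x47."""
    available = len(data) // TS_PACKET_SIZE
    if available == 0:
        return False
    count = min(packets_to_check, available)
    return all(data[i * TS_PACKET_SIZE] == TS_SYNC_BYTE for i in range(count))


def packet_pid(packet: bytes) -> int:
    """Extract the 13-bit packet identifier (PID) from one packet header."""
    return ((packet[1] & 0x1F) << 8) | packet[2]


# Synthetic packet for demonstration: sync byte, PID 0, zero-filled payload.
fake_packet = bytes([0x47, 0x40, 0x00, 0x10]) + bytes(184)
print(looks_like_transport_stream(fake_packet * 3), packet_pid(fake_packet))
```

To try it on real data, read the first few kilobytes of a captured stream or of a .ts file and pass the bytes to `looks_like_transport_stream`.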

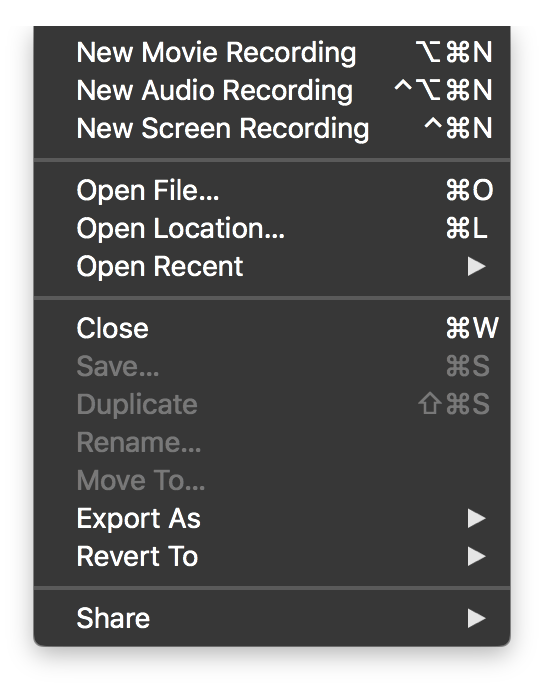

Apple's FAQ suggests using commercial hardware encoders, but if it's not that convenient to get your hands on one of those, there's also a software solution in the form of the almighty VLC. (The purists might forgive my usage of the GUI.)

Open a new Capture Device (⌘G) and select screen capture; specify size and frame rate at will. Check the streaming/serving box and proceed to 'Settings...'.

There you want to have a 'stream' transported via UDP. The address should be 127.0.0.1 (if your mediastreamsegmenter is also on your local machine), and any port not taken should do; let's take 50000.

The transcoding option for video should be h264; the bitrate can be chosen according to your desired quality.

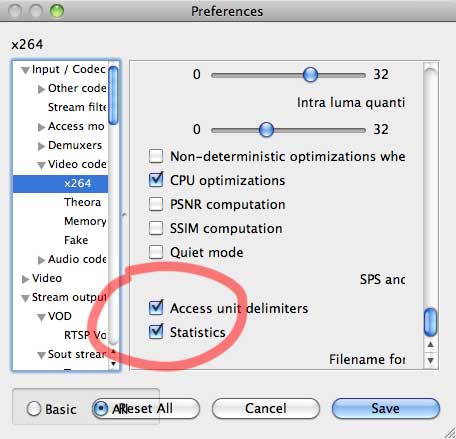

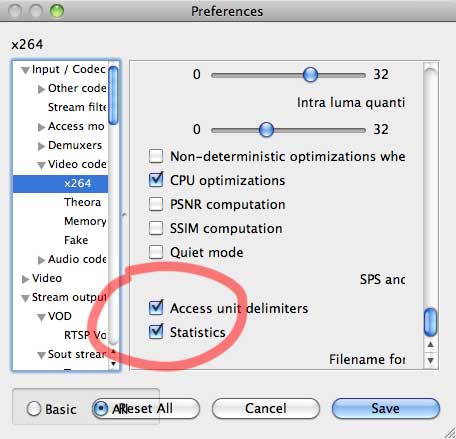

I thought that was about it, but I was wrong. Remember that the stream must use Access Unit Delimiter NALs? Well, here they are.

VLC > Preferences > Video, then click the 'All' radio button in the left corner, which should give you a detailed list of video preferences. Select 'Input / Codecs' > Video codecs > x264 and scroll to the very bottom of the settings. (Yes, it took me a while to find that.)

Enable the Access Unit Delimiters.

OK, so now we have a nicely encoded video stream at 127.0.0.1:50000.
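For the purists, the same setup can probably be expressed on the command line. I only used the GUI, so treat the following as an untested sketch: it assumes VLC's `screen://` input, the `transcode` and `std` stream output modules, and the x264 `aud` option for the Access Unit Delimiters, all of which may behave differently depending on your VLC version. The bitrate of 800 kbit/s is an arbitrary example value.

```python
def vlc_screen_stream_args(host="127.0.0.1", port=50000, video_kbps=800):
    """Build an argument list that asks VLC to stream the screen as H.264 in MPEG-TS over UDP."""
    # x264{aud} is assumed to enable Access Unit Delimiters (the GUI checkbox above).
    transcode = f"transcode{{vcodec=h264,vb={video_kbps},venc=x264{{aud}}}}"
    # mux=ts wraps the video in an MPEG-2 transport stream, sent via UDP.
    output = f"std{{access=udp,mux=ts,dst={host}:{port}}}"
    return ["vlc", "screen://", "--sout", f"#{transcode}:{output}"]


args = vlc_screen_stream_args()
print(args[-1])
# To launch VLC with it: subprocess.run(args) after `import subprocess`
```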

Segmenter

Onwards to the segmenter. Having another look at the mediastreamsegmenter example in the man page, we know that it needs the following command:

mediastreamsegmenter -b http://192.168.20.117/live -s 3 -t 10 -D -f /Library/WebServer/Documents/live 127.0.0.1:50000

A quick walkthrough:

-b http://192.168.20.117/live (also: -base-url) is the base URL that gets prefixed to the .ts file names in the index file, i.e. the address under which clients fetch the segments.

-s 3 (-sliding-window-entries) the number of files in the index file

-t 10 (-target-duration) we want 10-second slices (this is also the default)

-D (-delete-files) delete .ts files if they are no longer needed

-f (-file-base) Directory to store the media and index files

...and last, the address and port of our stream, in this case 127.0.0.1:50000
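If you would rather start the segmenter from a script, the command can be assembled from the options just described. This is a minimal sketch: the function and parameter names are made up, and only the flags from the walkthrough are used.

```python
import shlex


def segmenter_args(base_url, file_base, source,
                   window_entries=3, target_duration=10, delete_old=True):
    """Build the mediastreamsegmenter argument list from the options above."""
    args = [
        "mediastreamsegmenter",
        "-b", base_url,              # URL prefix for the .ts files in the index
        "-s", str(window_entries),   # number of entries in the index file
        "-t", str(target_duration),  # segment length in seconds
    ]
    if delete_old:
        args.append("-D")            # delete .ts files that are no longer needed
    args += ["-f", file_base, source]
    return args


args = segmenter_args(
    "http://192.168.20.117/live",
    "/Library/WebServer/Documents/live",
    "127.0.0.1:50000",
)
print(" ".join(shlex.quote(a) for a in args))
# To actually run it: subprocess.run(args, check=True) after `import subprocess`
```

Passing the arguments as a list avoids shell quoting problems if the file base ever contains spaces.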

If everything worked, you should see the prog_index.m3u8 file and .ts files in the file base directory.
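To see what the segmenter is doing, it helps to look inside the index file. The sketch below parses the handful of playlist tags that matter here (`#EXT-X-TARGETDURATION`, `#EXT-X-MEDIA-SEQUENCE`, `#EXTINF`). The sample playlist and its segment file names are invented for illustration; your prog_index.m3u8 will differ.

```python
def parse_index(text: str) -> dict:
    """Extract target duration, media sequence and segment entries from an index file."""
    info = {"target_duration": None, "media_sequence": None, "segments": []}
    pending_duration = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-TARGETDURATION:"):
            info["target_duration"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            info["media_sequence"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,<optional title>"
            pending_duration = float(line.split(":", 1)[1].split(",", 1)[0])
        elif line and not line.startswith("#"):
            # Any non-comment line is a segment URI belonging to the last #EXTINF.
            info["segments"].append((line, pending_duration))
            pending_duration = None
    return info


# Invented sample with a sliding window of 3 entries.
SAMPLE = """#EXTM3U
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:42
#EXTINF:10,
http://192.168.20.117/live/segment42.ts
#EXTINF:10,
http://192.168.20.117/live/segment43.ts
#EXTINF:10,
http://192.168.20.117/live/segment44.ts
"""

index = parse_index(SAMPLE)
print(index["target_duration"], index["media_sequence"], len(index["segments"]))
```

With -s 3 you would expect three segment entries at any time, and the media sequence number should keep increasing while the stream is running.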

Distribution

The only thing left now is making a page that serves the stream. This should not be too difficult. A simple page with the nice HTML5 video element should be fine. I made this the index.html page, but of course any other name is fine too.

<video width="320" height="240">

<source src="prog_index.m3u8" />

</video>
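I served everything from the Mac's built-in web server, but for a quick local test any HTTP server will do, as long as it reports sensible MIME types for the index file and the segments. The following is a minimal sketch using Python's standard library; the class and function names and port 8000 are my own choices, and the MIME types are the ones Apple documents for .m3u8 and .ts files.

```python
import functools
import http.server


class HLSRequestHandler(http.server.SimpleHTTPRequestHandler):
    """Static file handler that knows the MIME types used by HTTP Live Streaming."""

    extensions_map = {
        **http.server.SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",
        ".ts": "video/MP2T",
    }


def make_server(directory: str, port: int = 8000) -> http.server.ThreadingHTTPServer:
    """Create (but do not start) a server for the segmenter's file base directory."""
    handler = functools.partial(HLSRequestHandler, directory=directory)
    return http.server.ThreadingHTTPServer(("", port), handler)


# Usage, with the file base directory from the segmenter command:
#   make_server("/Library/WebServer/Documents/live").serve_forever()
```

Keep in mind that the base URL given to the segmenter with -b has to match the address and port this server is reachable on.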

OK, so now we know how to do near real-time streaming to multiple clients, but what if you wanted to do real-time streaming to only a couple of WiFi-connected iPhones? That's the topic for the next post.